Category: User Experience

AAAI 2018 – Presentation & Slides

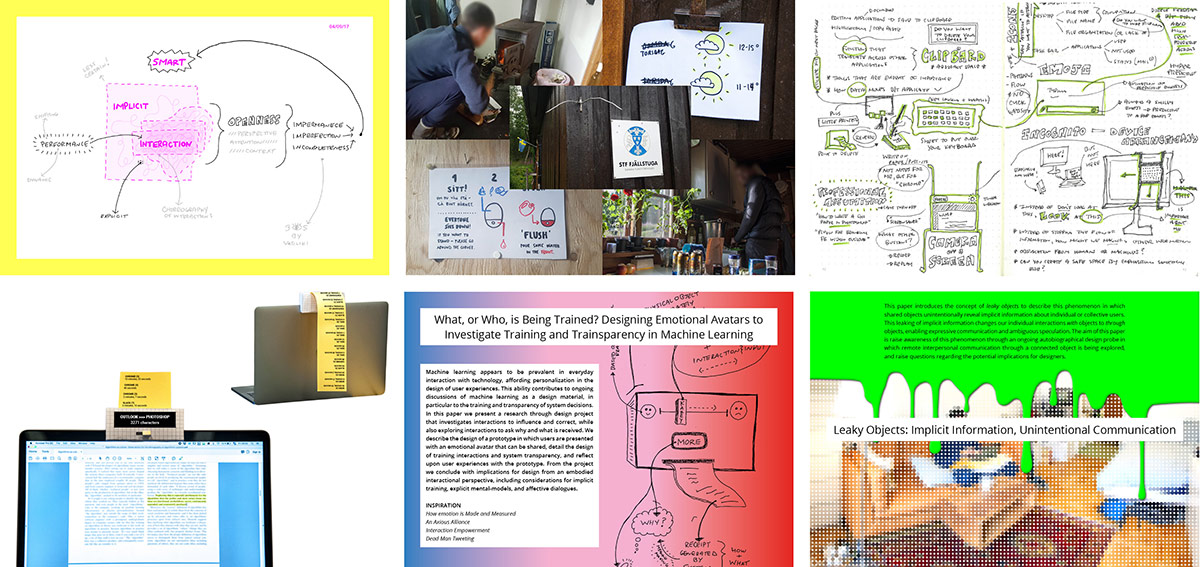

Last week I presented my paper Design Methods to Investigate User Experiences of Artificial Intelligence at the 2018 AAAI Spring Symposium on the User Experiences of Artificial Intelligence. The picture above was taken by a Google Clips camera, which was also the subject of one of the presented papers. Below are my slides, which contain supplementary images to my paper on the three design methods I have engaged with relative to the UX of AI. Another blog post will soon follow with reflections on other papers presented.

AAAI 2018 – Accepted Spring Symposia Papers

Two papers were accepted to the AAAI 2018 Spring Symposia: Design Methods to Investigate User Experiences of Artificial Intelligence for The UX of AI symposium and The Smart Data Layer for Artificial Intelligence for the Internet of Everything symposium. I’ll be presenting the former at Stanford at the end of March; below is the abstract.

Design Methods to Investigate User Experiences of Artificial Intelligence

This paper engages with the challenges of designing ‘implicit interaction’, systems (or system features) in which actions are not actively guided or chosen by users but instead come from inference driven system activity. We discuss the difficulty of designing for such systems and outline three Research through Design approaches we have engaged with – first, creating a design workbook for implicit interaction, second, a workshop on designing with data that subverted the usual relationship with data, and lastly, an exploration of how a computer science notion, ‘leaky abstraction’, could be in turn misinterpreted to imagine new system uses and activities. Together these design activities outline some inventive new ways of designing User Experiences of Artificial Intelligence.

EuroIA 2017 – Upcoming talk on Implicit Interactions

Very excited to be giving a 20-minute talk on September 30th at EuroIA in Stockholm on Implicit Interactions: Implied, Intangible and Intelligent! Below is my talk abstract.

As our physical and digital environments are ubiquitously embedded with intelligence, our interactions with technology are becoming increasingly dynamic, contextual and intangible. More importantly, this transformation signifies a shift from explicit to implicit interactions. Explicit interactions contain information that demands our attention for direct engagement or manipulation. For example, a physical door with a ‘push’ sign clearly describes the required action for a person to enter a space. In contrast, implicit interactions rely on peripheral information to seamlessly behave in the background. For example, a physical door with motion sensors automatically opens as a person approaches, predicting intent and responding appropriately without explicit contact or communication.

As ambient agents, intelligent assistants and proactive bots drive this shift from explicit to implicit interactions in our information spaces, what are the implications for everyday user experiences? And how do we architect dynamic, personal information in shared, phygital environments?

This talk aims to answer the above questions by first introducing an overview of explicit and implicit interactions in our mundane physical and digital environments. Then we will examine case studies in which unintended and unwanted consequences occur, revealing complex design challenges. Finally, we will conclude with example projects that explore a choreography between explicit and implicit interactions, and the resulting insights into architecting implied, intangible and intelligent information.

Updated Portfolio in Google Polymer

This past week I finally finished refactoring my interaction design portfolio using Google Polymer and added new projects (Systems of Systems, Burrito, Phygital Party Mode, and Story of Me). There is still much work to do, including incorporating page routing, improving performance and load time, and adopting better code practices. I’m new to Polymer, so there is much to learn.

The reason I’ve decided to use Polymer is the ease with which I can use Firebase. Although it is currently disabled while I continue refactoring, my portfolio has a mode called Phygital Party Mode: when a visitor clicks it, the colors seen in the above GIF are reflected in a Philips Hue light in my London flat. I also think Polymer is a relevant, modern tool to learn independent of my portfolio.
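As a rough illustration of the color hand-off in Phygital Party Mode, here is a minimal Python sketch of one step: turning a web color into a light state. It is not the portfolio's actual code, which is built with Polymer and Firebase. The function name `hex_to_hue_state` and the `#RRGGBB` input format are assumptions for illustration, and the value ranges (hue 0 to 65535, saturation 0 to 254, brightness 1 to 254) follow the hue/sat/bri light state of the original Hue bridge API, so check them against your bridge's version.

```python
import colorsys


def hex_to_hue_state(hex_color: str) -> dict:
    """Convert a '#RRGGBB' web color into a Hue-style light state (hypothetical helper)."""
    value = hex_color.lstrip("#")
    if len(value) != 6:
        raise ValueError(f"expected a color like '#RRGGBB', got {hex_color!r}")

    # Split into red, green and blue channels scaled to the 0.0-1.0 range.
    r, g, b = (int(value[i:i + 2], 16) / 255 for i in (0, 2, 4))

    # colorsys returns hue, saturation and value, each in the 0.0-1.0 range.
    h, s, v = colorsys.rgb_to_hsv(r, g, b)

    return {
        "on": v > 0,                    # treat pure black as "light off"
        "hue": round(h * 65535),        # assumed range: 0-65535
        "sat": round(s * 254),          # assumed range: 0-254
        "bri": max(1, round(v * 254)),  # assumed range: 1-254
    }


if __name__ == "__main__":
    print(hex_to_hue_state("#FF0000"))
```

In a setup like the one described, the website would write the chosen color to a shared store such as Firebase, and a small listener near the light would convert it with a helper like this before sending it on to the bridge.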

IxDA London – The Uncanny Valley & Subconscious Biases of Conversational UI

The theme of IxDA London’s June event was Algorithms, Machine Learning, AI and us designers – an evening of great discussions that prompted me to dig up reading material on The Uncanny Valley and Subconscious Biases. Both these topics were strongly present, the former directly and the latter indirectly, in Ed and John’s presentation on designing for IBM Watson. They discussed the ‘Uncanny Valley of Emotion’ as a third line on the curve in addition to ‘still’ and ‘moving’ in the traditional model of the uncanny valley. While I understand their intent in creating a third category – accounting for the invisible systems, agents, and interactions not visible or physically accessible – in retrospect I disagree with the characterization. Emotion, or the lack of it, can be explicitly betrayed by movement. From my understanding, subtle asynchronous or unnatural movements directly related to emotional responses expected by humans are a key ingredient in the Uncanny Valley. Therefore, I would rename the ‘emotion’ curve suggested by the Watson team to ‘implicit,’ thereby retaining emotion as a criterion for both explicit (still and moving) and implicit interactions.

The second subtopic, subconscious biases, greatly concerns me. A recent article in the New York Times – Artificial Intelligence’s White Guy Problem – sums it up perfectly. As designers, how do we build into our processes accountability for subconscious (and conscious) biases relative to algorithms, machine learning, and conversational interfaces? I don’t have an answer but I would like to find one!

Relevant links and resources:

The Uncanny Valley

Uncanny valley: why we find human-like robots and dolls so creepy

Navigating a social world with robot partners: A quantitative cartography of the Uncanny Valley

The Uncanny Wall

Artificial Intelligence’s White Guy Problem

UX Process Checklist

At the beginning of 2016, inspired by a colleague who posted this great UX Process Checklist, I worked with colleagues on a side project to put together our own UX process proposal, as part of an ongoing effort to develop and share team processes.